A few years ago, I worked with a mid-sized retail company that was convinced its sales problem was purely about pricing. Discounts weren’t working, ads felt random, and customer loyalty was slipping. The real issue wasn’t price—it was blindness. They had mountains of customer data sitting in different systems, but no way to connect or understand it. Once they adopted big data technology, patterns emerged almost immediately: buying behavior by region, timing, device usage, even weather-driven demand. Revenue followed clarity.

That’s the power of big data technology.

We live in a world where data is generated every second—by smartphones, sensors, websites, vehicles, transactions, and people. But raw data alone is useless. Big data technology is what turns that chaos into insight. It helps businesses predict outcomes, governments plan smarter cities, healthcare systems save lives, and creators understand their audiences better.

In this guide, I’ll walk you through big data technology the way I explain it to clients and teams in the real world—no hype, no jargon for the sake of sounding smart. You’ll learn what it actually is, why it matters, how it works, which tools are worth your time, common mistakes to avoid, and how to get started step by step. Whether you’re a business owner, student, analyst, or just curious, this article is built to give you clarity and confidence.

Understanding Big Data Technology (A Beginner-Friendly Explanation)

Big data technology sounds intimidating, but the core idea is surprisingly simple. It’s about handling data that is too large, too fast, or too complex for traditional tools like spreadsheets or basic databases.

A helpful way to think about it is water.

A glass of water is easy to drink—that’s small data. A swimming pool needs pumps and filters—that’s larger data. Now imagine an ocean with tides, storms, and currents. You don’t “drink” it; you study it with ships, satellites, and sensors. That ocean is big data, and big data technology is the entire infrastructure that allows you to navigate it safely and usefully.

Big data is usually defined by the “5 Vs”:

- Volume: Massive amounts of data, often in terabytes or petabytes

- Velocity: Data arriving in real time or near real time

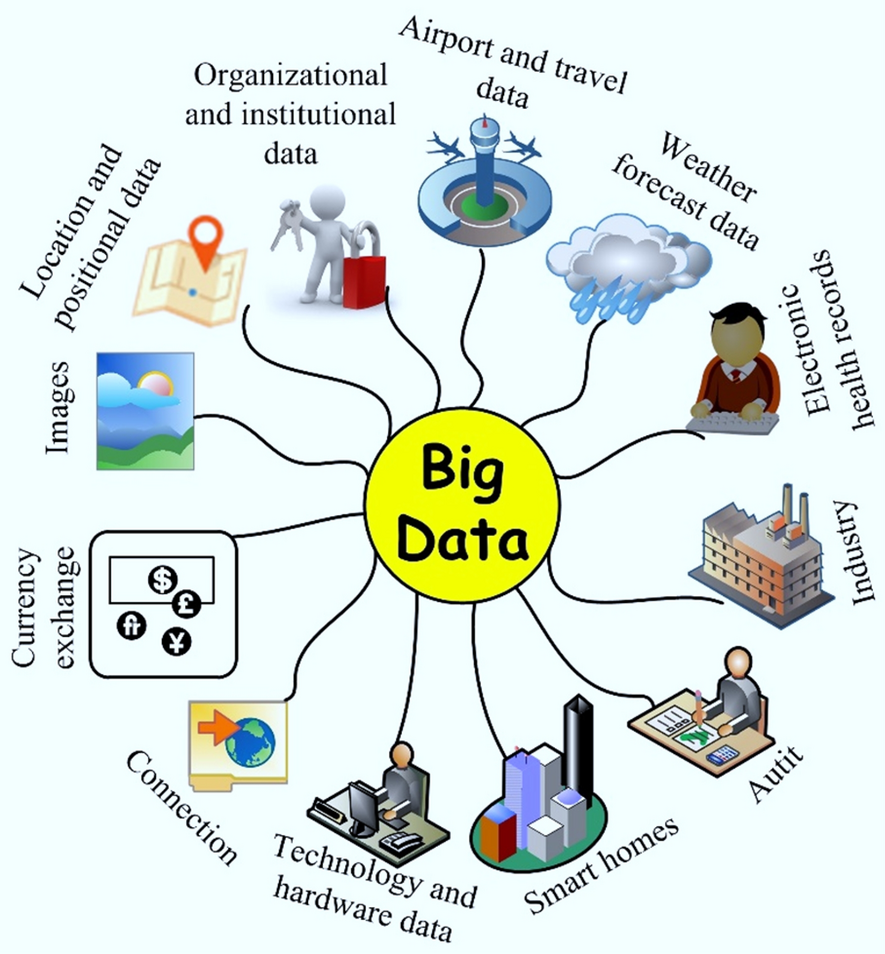

- Variety: Structured, semi-structured, and unstructured data (text, images, video, logs)

- Veracity: Data quality and trustworthiness

- Value: The insights you can extract

Big data technology includes storage systems, processing frameworks, analytics engines, and visualization tools that work together. Traditional single-server databases struggle at this scale because one machine eventually runs out of disk, memory, or CPU. Big data systems are designed to distribute workloads across many machines, handle failures gracefully, and scale as data grows.

This is why companies like Netflix, Amazon, Uber, and Google don’t just “use data”—they live on big data technology.

Why Big Data Technology Matters More Than Ever Today

Ten years ago, collecting data was the challenge. Today, everyone has data. The real advantage comes from how quickly and intelligently you can use it.

Big data technology matters because it changes decision-making from reactive to proactive. Instead of guessing what customers want, organizations can predict behavior. Instead of responding to problems after they happen, systems can detect risks early.

Here’s what I’ve seen repeatedly in practice:

Companies without big data rely on intuition and delayed reports.

Companies with big data rely on patterns, probabilities, and real-time feedback.

The difference shows up in:

- Faster decision cycles

- Lower operational costs

- Better customer experiences

- More accurate forecasting

- Stronger competitive advantage

It also matters because data volumes aren’t slowing down. IoT devices, AI systems, social platforms, and remote work tools are generating more data than ever. Without big data technology, most of that information is wasted.

In short, big data technology isn’t a “tech trend.” It’s infrastructure—like electricity or the internet. You don’t notice it until you don’t have it.

Core Components of Big Data Technology (How the System Actually Works)

Big data technology isn’t a single tool. It’s an ecosystem. Understanding the core components helps you see how everything fits together.

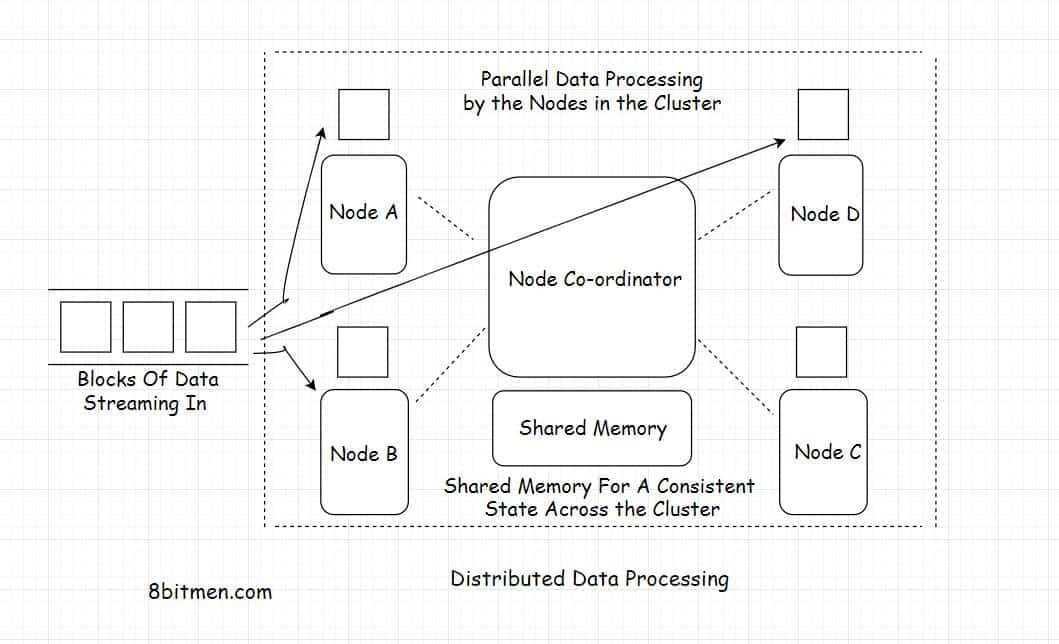

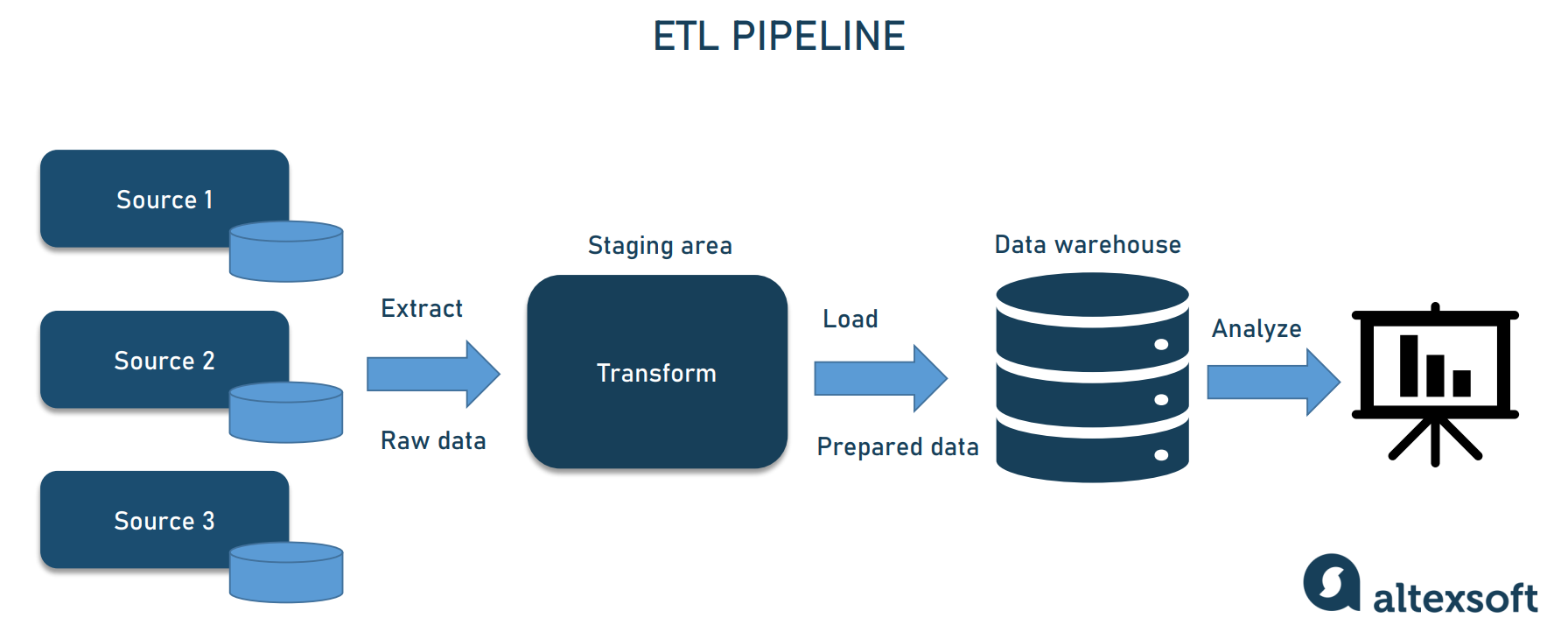

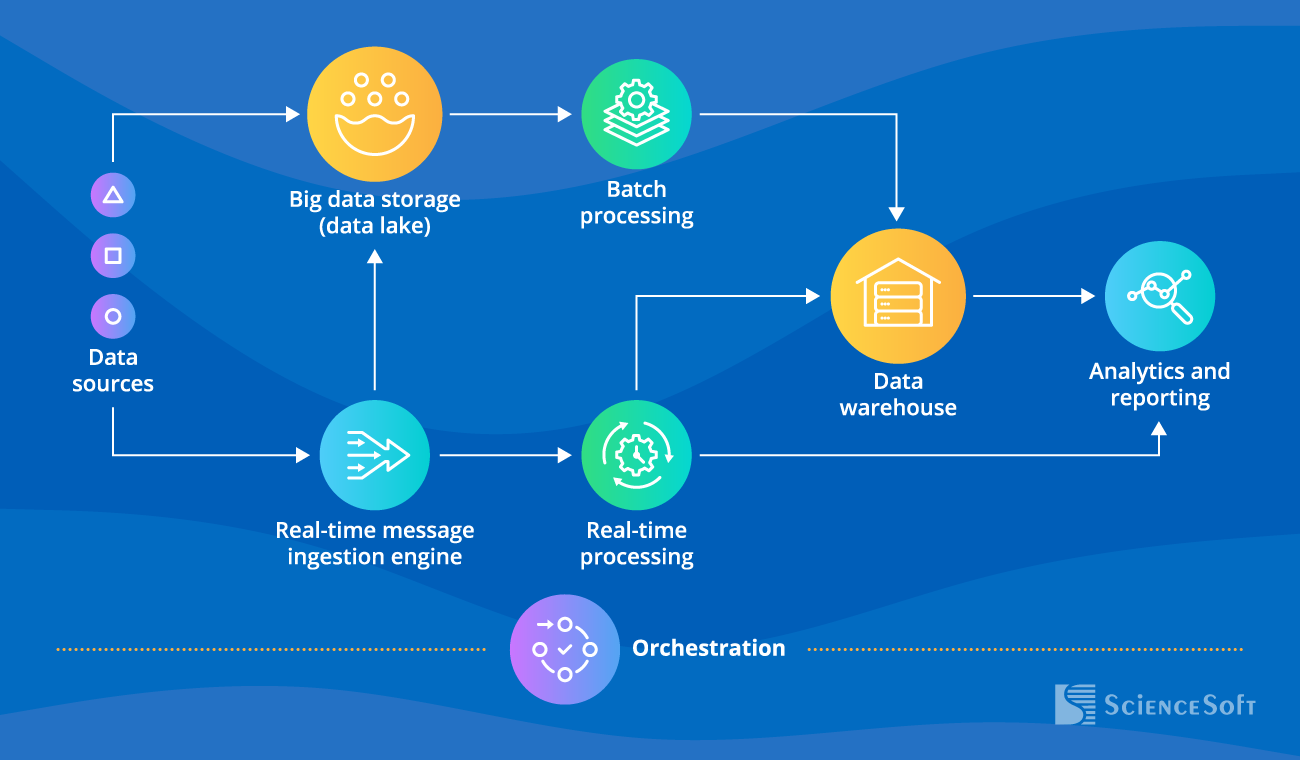

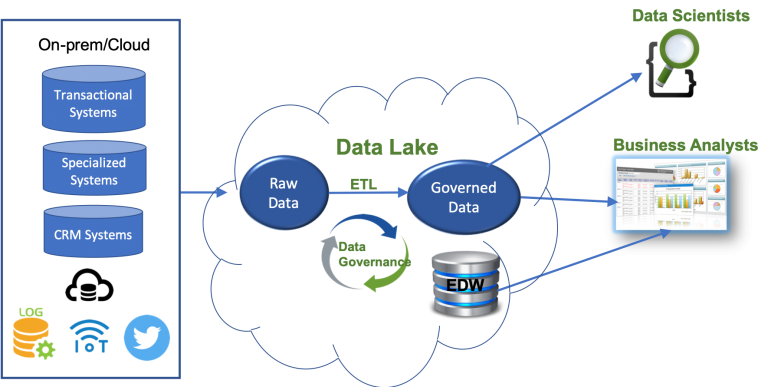

At a high level, big data systems follow a pipeline:

Data ingestion → Storage → Processing → Analytics → Visualization

Data ingestion is how data enters the system. This can be batch data (uploaded daily logs) or streaming data (real-time events from apps or sensors). Tools in this layer are built to handle high-speed inputs without breaking.
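To make the batch versus streaming distinction concrete, here is a minimal Python sketch. The event format (`user,action,amount`) and the function names are my own assumptions for illustration, not part of any particular ingestion tool.

```python
def parse_event(line):
    """Turn one raw 'user,action,amount' line into a dictionary."""
    user, action, amount = line.strip().split(",")
    return {"user": user, "action": action, "amount": float(amount)}


def ingest_batch(lines):
    """Batch ingestion: parse a whole file's worth of records in one pass."""
    return [parse_event(line) for line in lines if line.strip()]


def ingest_stream(source, buffer_size=2):
    """Streaming ingestion: emit small micro-batches as events arrive."""
    buffer = []
    for line in source:
        buffer.append(parse_event(line))
        if len(buffer) >= buffer_size:
            yield buffer
            buffer = []
    if buffer:
        yield buffer  # flush whatever is left when the source ends


# Hypothetical sample data standing in for a daily log or a live feed.
daily_log = ["u1,view,0", "u2,purchase,19.99", "u1,purchase,5.00"]

batch = ingest_batch(daily_log)
micro_batches = list(ingest_stream(iter(daily_log), buffer_size=2))
```

The batch function waits until it has everything, while the streaming generator hands data downstream as soon as a small buffer fills. Real ingestion tools add durability and backpressure on top of this basic shape.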

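Storage is where big data really differs from traditional databases. Instead of one big server, data is spread across many machines, usually with several copies of each piece. Capacity grows by adding machines rather than buying a bigger one, reads can be served in parallel, and losing a single machine doesn’t lose data.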
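One way to picture distributed storage is as a placement rule: given a record key, decide which machines hold a copy. The sketch below is a simplified illustration under my own assumptions (four hypothetical node names, three copies per key, plain modulo hashing). Production systems use more careful schemes so that adding a node doesn't reshuffle most of the data.

```python
import hashlib

NODES = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical machine names


def stable_hash(key):
    """Hash a key to the same integer on every run and every machine."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)


def place_replicas(key, nodes, replicas=3):
    """Pick distinct nodes for a key: a primary by hash, then its neighbours."""
    if replicas > len(nodes):
        raise ValueError("cannot place more replicas than there are nodes")
    start = stable_hash(key) % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(replicas)]


def readable_from(key, nodes, failed, replicas=3):
    """Nodes that can still serve the key after some machines have failed."""
    return [n for n in place_replicas(key, nodes, replicas) if n not in failed]


placement = place_replicas("order-1001", NODES)
survivors = readable_from("order-1001", NODES, failed={placement[0]})
```

Even with the primary node down, `survivors` still lists two machines holding the record, which is the fault tolerance the storage layer is buying.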

Processing is where raw data becomes usable. Distributed processing frameworks break large tasks into smaller ones and run them in parallel. This is how systems analyze billions of records efficiently.
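The split, process, and merge pattern described above can be sketched in a few lines of Python. This is only an illustration: the worker threads here stand in for separate machines, and the record layout and function names are assumptions of mine rather than the API of any real framework.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split(records, n_chunks):
    """Cut the dataset into roughly equal chunks, one per worker."""
    size = max(1, -(-len(records) // n_chunks))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]


def count_by_region(chunk):
    """Work done independently on each chunk (the 'map' step)."""
    return Counter(record["region"] for record in chunk)


def parallel_count(records, n_workers=4):
    """Process chunks in parallel, then merge partial results (the 'reduce' step)."""
    chunks = split(records, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_by_region, chunks))
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


# Hypothetical sample records.
records = [{"region": r} for r in
           ["north", "south", "north", "east", "south", "north"]]
totals = parallel_count(records, n_workers=3)
```

The key property is that each chunk can be counted without knowing about the others, so the same logic works whether the chunks live in one process or on a thousand machines.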

Analytics and machine learning layers extract meaning. This includes querying data, building predictive models, detecting anomalies, and generating insights.
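As a small taste of what anomaly detection means in practice, here is a sketch using a z-score, which measures how many standard deviations a value sits from the average. The daily order counts and the threshold of 2.0 are assumptions I picked for the example. Real systems usually rely on more robust methods, since a large outlier also inflates the average and spread it is being compared against.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs that sit far from the average."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [(i, v) for i, v in enumerate(values)
            if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts with one unusual day.
daily_orders = [102, 98, 105, 99, 101, 97, 250, 103]
anomalies = find_anomalies(daily_orders)
```

Here the only day flagged is the one with 250 orders. Whether that is a problem or a successful promotion is a question for a human, which is why this layer feeds the reporting layer rather than replacing it.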

Visualization and reporting turn insights into something humans can actually understand. Dashboards, charts, and alerts are often the final step—but they’re useless without everything before them working properly.

When all these layers are designed well, big data technology feels invisible. You ask a question, and the answer appears quickly—even if it’s based on years of historical data.

Benefits and Real-World Use Cases of Big Data Technology

The benefits of big data technology become obvious when you see it in action. Below are real-world use cases I’ve encountered or studied closely.

In business and e-commerce, big data helps companies personalize experiences. Recommendation engines analyze past behavior, browsing patterns, and contextual data to suggest products customers are actually likely to buy. This isn’t guesswork—it’s probability at scale.
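A very simple form of recommendation is counting which products are bought together. The sketch below is a toy version under my own assumptions (four hypothetical shopping baskets, plain co-occurrence counts). Production recommendation engines use far richer signals, but the underlying idea of turning past behavior into ranked suggestions is the same.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count how often each pair of items appears in the same basket."""
    co = defaultdict(Counter)
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co[a][b] += 1
    return co


def recommend(co, item, k=2):
    """Suggest the k items most often bought alongside `item`."""
    counts = co.get(item, {})
    # Sort by count (highest first), then by name so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))
    return [other for other, _ in ranked[:k]]


# Hypothetical purchase history.
baskets = [
    ["tent", "sleeping bag", "stove"],
    ["tent", "sleeping bag"],
    ["tent", "stove", "lantern"],
    ["tent", "sleeping bag"],
]
co = build_cooccurrence(baskets)
suggestions = recommend(co, "tent", k=2)
```

For someone buying a tent, this suggests a sleeping bag first (bought together three times) and a stove second (twice).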

In healthcare, big data technology supports early diagnosis and treatment planning. By analyzing patient histories, lab results, and population-level trends, hospitals can identify risk factors earlier and allocate resources more effectively.

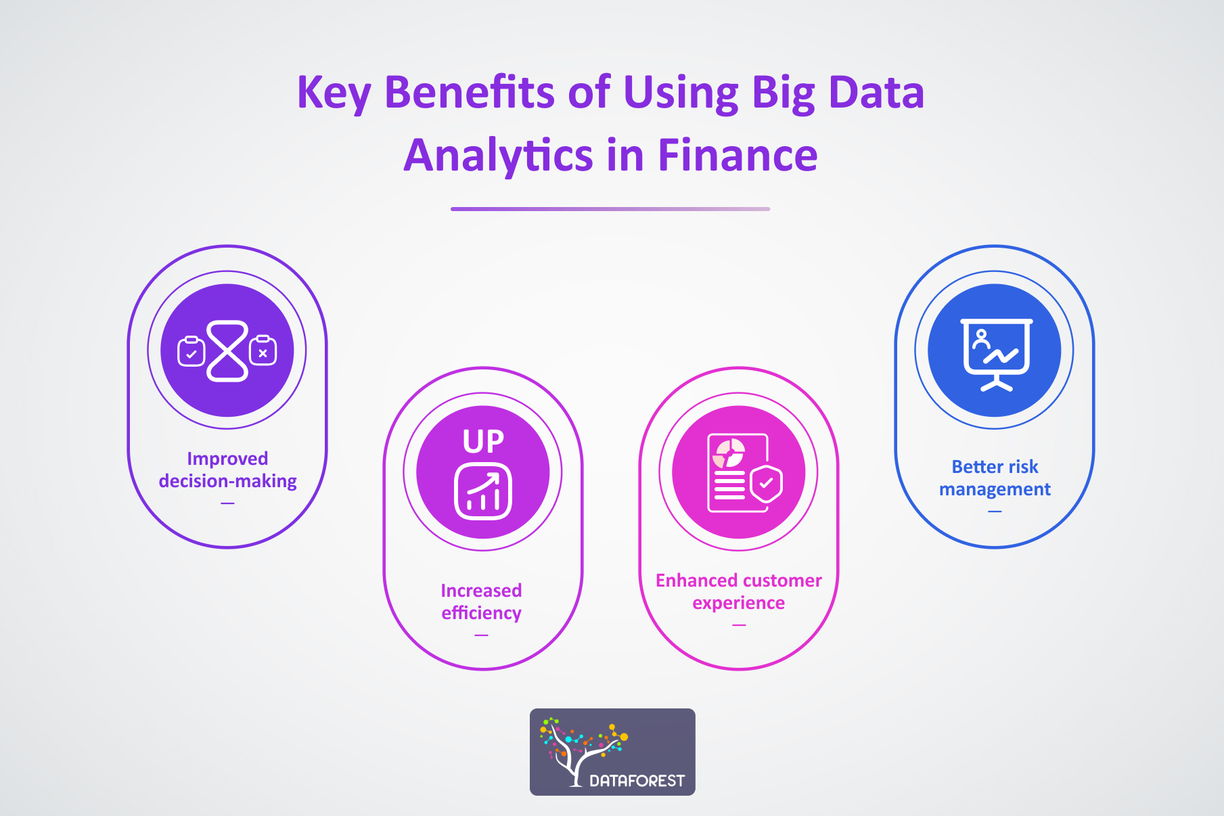

In finance, fraud detection systems analyze millions of transactions in real time. Suspicious patterns trigger alerts within seconds, preventing losses before they escalate.
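One common building block in this kind of system is a velocity check: flag a card that is used too many times in a short window. The sketch below is a simplified illustration, not how any specific bank does it. The limits (three transactions per 60 seconds), the class name, and the assumption that timestamps arrive in order for each card are all mine.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag a card when it exceeds max_txns within window_seconds."""

    def __init__(self, max_txns=3, window_seconds=60):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # card_id -> recent timestamps

    def check(self, card_id, timestamp):
        """Record a transaction and return True if it should be flagged."""
        window = self._recent[card_id]
        # Drop timestamps that have fallen out of the time window.
        while window and timestamp - window[0] >= self.window_seconds:
            window.popleft()
        window.append(timestamp)
        return len(window) > self.max_txns


# Hypothetical (card, seconds-since-start) events.
events = [("card-1", 0), ("card-1", 10), ("card-1", 20),
          ("card-1", 30), ("card-2", 31), ("card-1", 200)]
rule = VelocityRule(max_txns=3, window_seconds=60)
flags = [rule.check(card, ts) for card, ts in events]
```

Only the fourth transaction on `card-1` is flagged. By the time the card is used again at 200 seconds, the earlier activity has aged out of the window. Because each check only touches a small per-card buffer, the rule can run as transactions arrive instead of in a nightly report.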

In logistics and transportation, big data optimizes routes, predicts maintenance needs, and reduces fuel consumption. Fleet managers don’t just track vehicles—they anticipate issues.

In marketing, big data reveals what content works, when audiences engage, and how campaigns influence behavior across channels. Decisions become measurable instead of emotional.

The common thread is this: big data technology turns hindsight into foresight.

Step-by-Step Guide: How Big Data Technology Is Implemented in Practice

Implementing big data technology doesn’t start with tools—it starts with questions. This is where many teams go wrong.

Step one is defining the problem. What decision are you trying to improve? Revenue forecasting? Customer retention? Operational efficiency? Without a clear goal, data becomes noise.

Step two is identifying data sources. This includes internal systems (CRM, ERP, logs) and external sources (APIs, third-party datasets). At this stage, you assess data quality, frequency, and relevance.

Step three is choosing the right architecture. Cloud-based solutions are often best for scalability, but hybrid or on-prem setups may make sense for compliance-heavy industries.

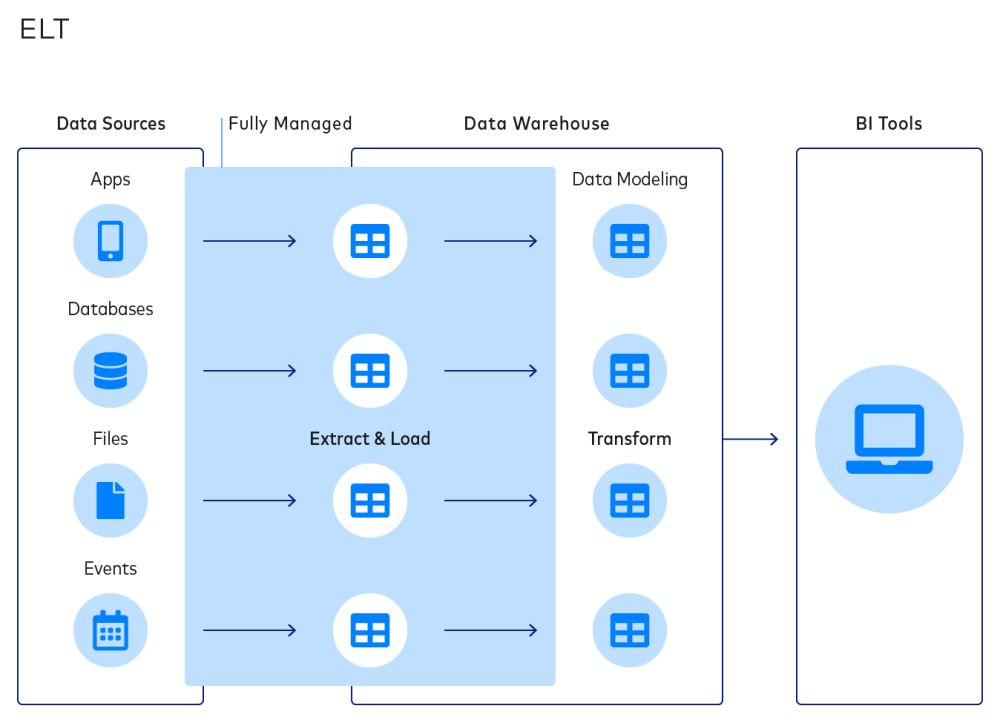

Step four is building the data pipeline. Data ingestion tools pull information in, transform it into usable formats, and store it efficiently. Automation here saves enormous time later.
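A pipeline at its smallest is three functions: pull data in, clean it, store it. The sketch below uses only the Python standard library, with an in-memory SQLite database standing in for a real data store. The CSV contents, table name, and cleaning rules are assumptions chosen for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with inconsistent casing and a missing amount.
RAW_CSV = """order_id,region,amount
1001,North,19.99
1002,south,5.00
1003,NORTH,
1004,East,12.50
"""


def extract(text):
    """Ingest: read raw rows exactly as they arrive."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize region names and skip rows with no amount."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue
        clean.append((int(row["order_id"]),
                      row["region"].strip().lower(),
                      float(row["amount"])))
    return clean


def load(conn, rows):
    """Store: write rows so that re-running the pipeline is harmless."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
totals = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()
```

Using `INSERT OR REPLACE` keyed on `order_id` means the same file can be loaded twice without creating duplicates, which is the kind of small automation choice that saves time later.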

Step five is analytics and modeling. Analysts and data scientists explore the data, create dashboards, and build predictive models aligned with business goals.

Step six is iteration. Big data technology isn’t “set and forget.” Models improve, data sources change, and insights evolve.

The most successful implementations I’ve seen treat big data as a living system, not a one-time project.

Tools, Platforms, and Technology Choices (What Actually Works)

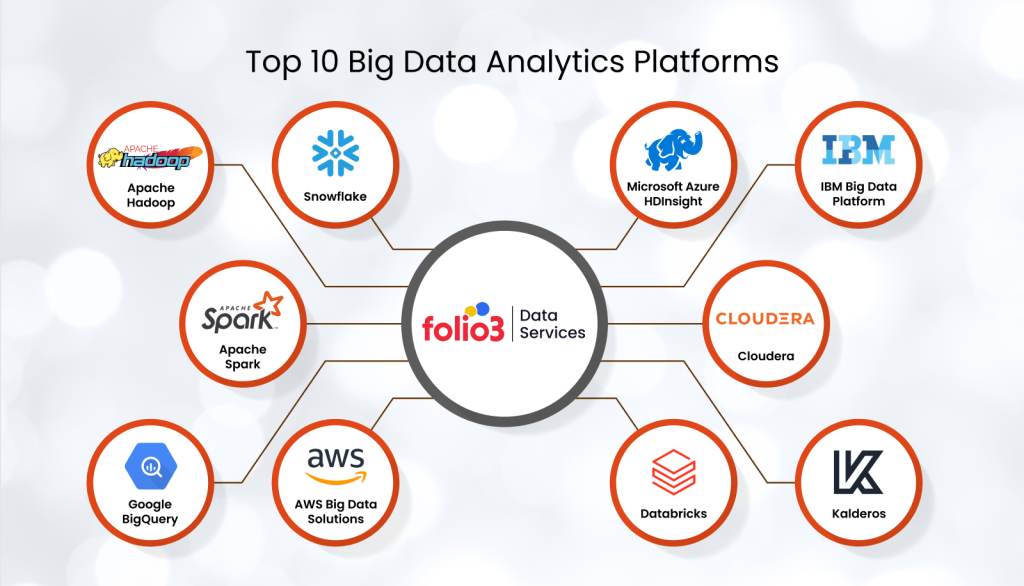

There’s no shortage of tools in the big data ecosystem. The challenge isn’t availability—it’s choosing wisely.

For storage and processing, Apache Hadoop popularized open-source distributed data handling, with HDFS for storage and MapReduce for batch processing. Today, many systems build on or integrate with Hadoop-based architectures.

For fast, in-memory analytics, Apache Spark is widely used. It excels at iterative processing, machine learning workloads, and near-real-time stream processing.

Cloud platforms such as AWS, Google Cloud, and Azure offer managed big data services that reduce infrastructure headaches. These are excellent for teams without deep DevOps resources.

For analytics and visualization, tools like Tableau, Power BI, and Looker help translate complex data into human-readable insights.

Free tools are great for learning and experimentation. Paid platforms often shine in scalability, support, and compliance. The right choice depends on team size, budget, and goals—not hype.

Common Mistakes in Big Data Technology (And How to Fix Them)

One of the biggest mistakes is collecting data without purpose. I’ve seen organizations build massive data lakes that nobody uses. Data should serve questions, not the other way around.

Another mistake is ignoring data quality. Big data doesn’t magically fix bad data. In fact, it amplifies errors if left unchecked. Validation and cleaning are non-negotiable.
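In practice, validation often means running every record through a short list of rules and setting aside the ones that fail instead of silently loading them. Here is a minimal sketch of that idea. The field names and the three rules are assumptions for illustration, and a real project would tailor them to its own data.

```python
def validate(record):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    return problems


def partition(records):
    """Split records into clean ones and quarantined ones with reasons."""
    valid, quarantined = [], []
    seen_ids = set()
    for record in records:
        problems = validate(record)
        if record.get("order_id") in seen_ids:
            problems.append("duplicate order_id")
        seen_ids.add(record.get("order_id"))
        if problems:
            quarantined.append((record, problems))
        else:
            valid.append(record)
    return valid, quarantined


# Hypothetical incoming records, three of which have issues.
incoming = [
    {"order_id": 1, "customer_id": "c1", "amount": 20.0},
    {"order_id": 2, "customer_id": "", "amount": 5.0},
    {"order_id": 3, "customer_id": "c2", "amount": -4.0},
    {"order_id": 1, "customer_id": "c1", "amount": 20.0},
]
valid, quarantined = partition(incoming)
```

Keeping the rejected records along with the reason they failed matters as much as the filtering itself, because it tells you which upstream source needs fixing.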

Overengineering is also common. Teams sometimes adopt complex stacks before mastering basics. Start simple, then scale.

A final mistake is treating big data as purely technical. Success requires collaboration between business stakeholders, analysts, and engineers. When only one group owns the system, value gets lost.

Fixing these issues usually comes down to alignment, discipline, and patience.

The Future of Big Data Technology (What’s Coming Next)

Big data technology is increasingly converging with artificial intelligence. Real-time analytics, automated decision systems, and self-healing data pipelines are becoming more common.

Edge computing is pushing analytics closer to data sources, reducing latency. Privacy-preserving techniques are gaining importance as regulations evolve.

What won’t change is the core principle: data is only powerful when it informs action.

Conclusion: Turning Big Data Technology Into Real Advantage

Big data technology isn’t about size—it’s about insight. It’s about asking better questions, seeing patterns earlier, and making decisions with confidence instead of guesswork.

If there’s one takeaway from this guide, it’s this: start with purpose, grow with discipline, and treat data as a strategic asset—not a technical burden.

If you’re already using big data technology, refine it. If you’re just starting, begin small but intentional. And if you’re stuck, revisit your questions before buying another tool.

If this guide helped clarify things, explore related topics, test a tool, or share your experience—data stories are always better when shared.

FAQs

What is big data technology in simple terms?

Big data technology refers to tools and systems that store, process, and analyze extremely large and complex datasets efficiently.

How is big data different from traditional databases?

Traditional databases struggle with scale and speed, while big data systems distribute workloads across many machines.

Who should use big data technology?

Businesses, researchers, governments, and organizations dealing with large or fast-moving datasets benefit most.

Is big data only for large companies?

No. Cloud platforms make big data accessible to startups and small teams as well.

What skills are needed to work with big data?

Basic data analysis, SQL, distributed systems knowledge, and business understanding are key.

Adrian Cole is a technology researcher and AI content specialist with more than seven years of experience studying automation, machine learning models, and digital innovation. He has worked with multiple tech startups as a consultant, helping them adopt smarter tools and build data-driven systems. Adrian writes simple, clear, and practical explanations of complex tech topics so readers can easily understand the future of AI.